Starostenko A.; Kozin F.; Gorbachev R.

Abstract — We introduce a set of real-time algorithms for a head-mounted gaze tracker consisting of three cameras: two eye cameras and one scene camera. The direction of the optical axis of the eye in three-dimensional space is calculated from the reflections of IR LEDs on the cornea. Individual features of the user are taken into account through a short calibration procedure. The described algorithms combine high accuracy in determining the point of gaze with high speed.

The procedure for determining the point of gaze consists of the following algorithms:

estimation of the pupil positions in the eye camera frames using thresholding that takes the frame histogram into account, followed by approximation of the pupil by an ellipse;

estimation of the IR LED glare positions in the eye camera frames using thresholding;

filtering of the glares by brightness, size, and circularity, and rejection of glares that lie beyond the iris; the size of the iris is estimated from the distance between the eye camera and the pupil position calculated on the previous frame;

indexing of the glares using template matching;

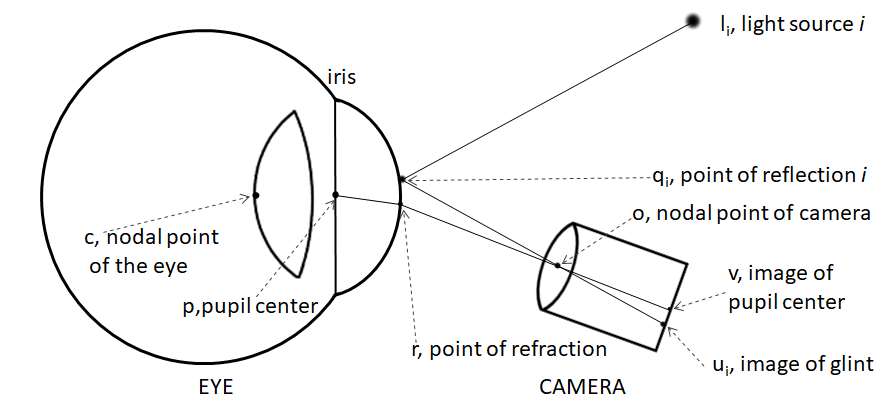

estimation of the optical axis angles of the eye using a spherical model of the cornea and nonlinear optimization methods;

estimation of the point of gaze on the scene camera frame using individual user features found during the calibration process.
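
As an illustration of the glare filtering step in the list above, the following minimal sketch rejects candidate blobs by brightness, size, circularity, and distance from the pupil center. It is not the authors' implementation: the `Blob` structure, the function names, all threshold values, the 6 mm physical iris radius, and the pinhole projection used to convert that radius to pixels are assumptions made for this example.

```python
import math
from dataclasses import dataclass


@dataclass
class Blob:
    """Candidate glare blob from thresholding (hypothetical structure for this sketch)."""
    cx: float               # centroid x, pixels
    cy: float               # centroid y, pixels
    area: float             # blob area, pixels^2
    perimeter: float        # contour length, pixels
    mean_brightness: float  # mean gray level, 0..255


def circularity(blob):
    """Return 4*pi*A / P^2: 1.0 for an ideal circle, lower for elongated shapes."""
    if blob.perimeter <= 0:
        return 0.0
    return 4.0 * math.pi * blob.area / blob.perimeter ** 2


def iris_radius_px(focal_px, distance_mm, iris_radius_mm=6.0):
    """Project an assumed physical iris radius into pixels with a pinhole model."""
    return focal_px * iris_radius_mm / distance_mm


def filter_glares(blobs, pupil_xy, focal_px, distance_mm,
                  min_brightness=200.0, min_area=4.0, max_area=150.0,
                  min_circularity=0.6):
    """Keep blobs that are bright, small, round, and inside the estimated iris."""
    max_r = iris_radius_px(focal_px, distance_mm)
    px, py = pupil_xy
    kept = []
    for b in blobs:
        if b.mean_brightness < min_brightness:
            continue  # too dim to be an LED reflection
        if not (min_area <= b.area <= max_area):
            continue  # noise speck or an overly large reflection
        if circularity(b) < min_circularity:
            continue  # elongated highlight rather than a compact glare
        if math.hypot(b.cx - px, b.cy - py) > max_r:
            continue  # beyond the estimated iris boundary
        kept.append(b)
    return kept
```

Here `distance_mm` stands for the camera-to-pupil distance computed on the previous frame, so the iris radius in pixels shrinks as the eye moves away from the camera. In practice the thresholds would need tuning for the specific cameras and LEDs.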

Calibration uses a moving ArUco calibration marker and its detection in the scene camera frame. The gaze position in the scene camera frame is then computed by a regression algorithm, which implicitly accounts for the individual characteristics of the user.
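
The text does not specify the form of the regression. As a hedged sketch only, the example below fits a second-order polynomial from two optical-axis angles to scene-camera pixel coordinates by ordinary least squares. The feature set, the function names, and the pairing of angles with marker centers are assumptions for illustration, and the pure-Python solver is used only to keep the example dependency-free.

```python
def features(yaw, pitch):
    """Second-order polynomial terms of the two angles (an assumed feature set)."""
    return [1.0, yaw, pitch, yaw * pitch, yaw * yaw, pitch * pitch]


def solve(a, b):
    """Solve the square system a x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate calibration samples")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):  # back substitution
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def fit(samples):
    """Fit from samples [((yaw, pitch), (u, v)), ...] via the normal equations.

    Returns two coefficient lists, one for u and one for v.
    """
    rows = [features(*angles) for angles, _ in samples]
    k = len(rows[0])
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    coeffs = []
    for axis in range(2):
        atb = [sum(r[i] * s[1][axis] for r, s in zip(rows, samples))
               for i in range(k)]
        coeffs.append(solve(ata, atb))
    return coeffs


def predict(coeffs, yaw, pitch):
    """Map optical-axis angles to a (u, v) point on the scene camera frame."""
    f = features(yaw, pitch)
    return tuple(sum(c * x for c, x in zip(cs, f)) for cs in coeffs)
```

Each calibration sample would pair the angles estimated for one frame with the marker center detected in the corresponding scene frame. At least six well-spread samples are needed for this feature set; more samples average out detection noise.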

I. INTRODUCTION

Various methods of pointing at objects have been available to humanity since ancient times. In the pre-digital era, people pointed with their hands, with pointers, or by voice. With the emergence of digital devices, the computer mouse and the touch screen were added to these common methods. In recent years, there has been a growing effort to explore new pointing methods such as brain-computer interfaces [1] and point of gaze [2]. Price and usability remain pressing problems for these new pointing methods.

Approaches to determining the point of gaze have been studied in laboratory conditions since the end of the 19th century using wearable lenses, IR LED reflections (glints), and stationary and wearable devices, usually called trackers. Nowadays, a number of wearable trackers have been developed [3]. Trackers are applied in a wide range of fields: neuroscience, psychology, industrial engineering, marketing, and computer science [4].

This article presents a set of algorithms for determining the point of gaze in real time for a head-mounted tracker consisting of three cameras: two eye cameras and one scene camera. One of the most important goals in designing the algorithm set was high performance, which in turn makes it possible to reduce the cost of the device as well as its power consumption and heat dissipation.

The article is organized as follows: Section II discusses the set of algorithms used to determine the point of gaze, and Section III presents the test results.


Fig. 1. Algorithm dependency diagram.

Read the full article: Real-Time Algorithms for Head Mounted Gaze Tracker